Hi, I used the spider to collect 10,000 URLs with useful information from a paid site where I have a user ID and a password. When I use Xpathonurl, it returns some results, but both the email and the phone number are replaced by "access for user". How can I get the email and the phone number, given that I already get the address and other non-strategic information?

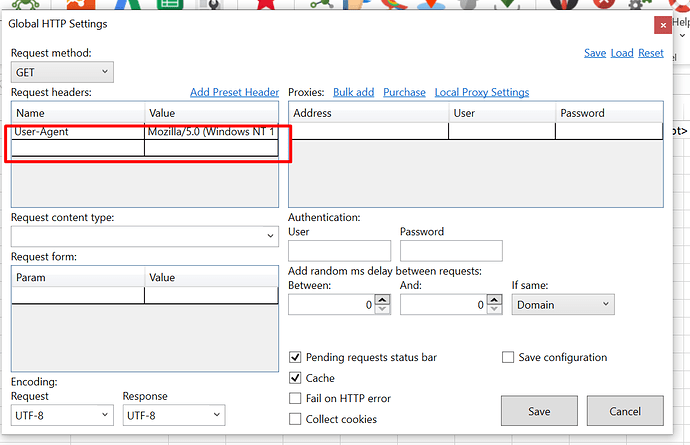

Is there a place where I can provide the user ID and the password?

Or is it impossible ?

Thanks.