I am new to SeoTools. From the page https://www.theweathernetwork.com/ca/hourly-weather-forecast/british-columbia/prince-george I would like to pull the data in the table marked "Hourly" into individual cells in Excel.

The XPath for one of the elements of the table is:

`id('hourly-weather-forecast')/x:div[1]/x:div[4]`

From reading other answers, my understanding is that this expression should be converted to something like:

`//*[@id='hourly-weather-forecast']/div[1]/div[4]`
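The conversion described above amounts to two mechanical rewrites: `id('...')` becomes `//*[@id='...']`, and the `x:` prefix in front of each element name is dropped. A minimal Python sketch of that rewrite, assuming `x:` is the only prefix present (the function name `browser_xpath_to_plain` is hypothetical, not part of SeoTools):

```python
import re


def browser_xpath_to_plain(xpath: str) -> str:
    """Rewrite an XPath of the form id('...')/x:tag[...] into //*[@id='...']/tag[...].

    Assumption: "x:" is the only namespace prefix in the expression;
    any other prefix is left untouched.
    """
    # id('foo') selects the element whose id attribute is foo
    plain = re.sub(r"^id\('([^']*)'\)", r"//*[@id='\1']", xpath)
    # Drop the "x:" prefix at the start of each location step
    plain = re.sub(r"(^|/)x:", r"\1", plain)
    return plain


print(browser_xpath_to_plain("id('hourly-weather-forecast')/x:div[1]/x:div[4]"))
# → //*[@id='hourly-weather-forecast']/div[1]/div[4]
```

The result matches the hand-converted expression, so the conversion itself looks right; a blank result has to come from somewhere else.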

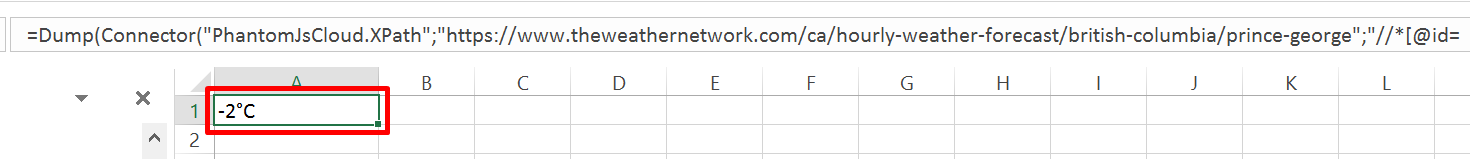

which gives the formula:

`=XPathOnUrl("https://www.theweathernetwork.com/ca/hourly-weather-forecast/british-columbia/prince-george","//*[@id='hourly-weather-forecast']/div[1]/div[4]")`

However, the cell only ever comes back blank.
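One possible reason for the blank cell, which is an assumption and not confirmed for this site, is that the hourly table is filled in by JavaScript after the page loads, so the HTML that `XPathOnUrl` downloads never contains the nested `div`s. The Python sketch below uses only the standard library to evaluate the same path against two invented snippets (hypothetical markup, not the real page source): when the nodes exist the path returns their text, and when the container is empty the same path matches nothing, which would plausibly show up in Excel as an empty cell. Note that `xml.etree.ElementTree` needs a leading `.` on the path and only parses well-formed markup.

```python
import xml.etree.ElementTree as ET

# Same path as in the formula, with the leading "." ElementTree requires
PATH = ".//*[@id='hourly-weather-forecast']/div[1]/div[4]"

# Hypothetical markup where the table rows are present in the HTML
SAMPLE = """
<html><body>
  <div id="hourly-weather-forecast">
    <div>
      <div>Time</div>
      <div>Temp</div>
      <div>Feels like</div>
      <div>-3</div>
    </div>
  </div>
</body></html>
""".strip()

# Hypothetical markup where the container exists but is still empty
EMPTY = '<html><body><div id="hourly-weather-forecast"></div></body></html>'


def cell_text(markup: str, path: str) -> list:
    """Return the text of every element the path selects."""
    root = ET.fromstring(markup)
    return [hit.text for hit in root.findall(path)]


print(cell_text(SAMPLE, PATH))  # → ['-3']
print(cell_text(EMPTY, PATH))   # → []
```

Comparing the page source as downloaded (not the browser's live DOM inspector) against the XPath is a quick way to tell which of the two cases applies.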

Any help on this would be appreciated!

Thanks!