Hello dear community,

I am extracting data from around 40k links using a crawler.

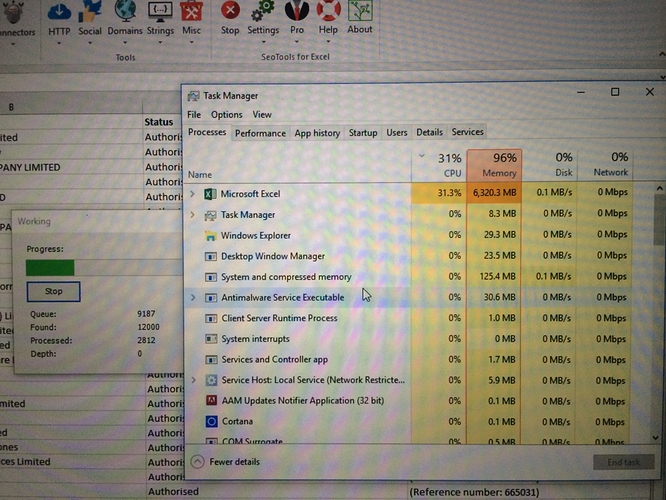

The crawler starts off correctly, pulling data from each link in around 2-3 seconds. But after running for around 10 hours, it is using over 6 GB of RAM and has only extracted around 2.8k links.

Here's a picture:

Is there a way to clear its memory, or to process the links in batches?
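For reference, here is a minimal, library-agnostic sketch of what batching could look like, assuming the slowdown comes from state that builds up over one long run. It splits the URL list into fixed-size batches and writes each result to a JSON Lines file as soon as it is produced, so results are not held in memory. `run_batch` is a hypothetical function you would supply: it is assumed to start a fresh crawler for the given URLs, yield one dict per page, and shut the crawler down before returning. The batch size of 500 and the output format are also assumptions, not part of any particular crawler's API.

```python
import json
from itertools import islice
from typing import Callable, Iterable, Iterator


def batched(items: Iterable[str], size: int) -> Iterator[list[str]]:
    """Yield successive lists of at most `size` items."""
    it = iter(items)
    while batch := list(islice(it, size)):
        yield batch


def crawl_in_batches(
    urls: Iterable[str],
    run_batch: Callable[[list[str]], Iterable[dict]],  # hypothetical, user-supplied
    out_path: str,
    batch_size: int = 500,  # assumed value; tune for your workload
) -> int:
    """Crawl URLs batch by batch, appending each record to a JSON Lines file.

    Records are written out immediately instead of being collected in a list,
    so memory use is bounded by one batch rather than all links.
    Returns the number of records written.
    """
    written = 0
    # Append mode, so a restarted run does not overwrite earlier output.
    with open(out_path, "a", encoding="utf-8") as out:
        for batch in batched(urls, batch_size):
            # run_batch is assumed to create a fresh crawler for this batch
            # and release it afterwards, freeing its memory between batches.
            for record in run_batch(batch):
                out.write(json.dumps(record) + "\n")
                written += 1
            out.flush()  # persist progress after every batch
    return written
```

With 40k links and a batch size of 500, this would run 80 separate short crawls instead of one 40k-link crawl. If memory still grows inside a single batch, the leak is likely within the crawler itself, and a smaller batch size or running each batch in its own process are options worth trying.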